Rameem Siddiqui

An open letter calling for a 6-month pause on ‘giant AI experiments’ has attracted signatures from the likes of Tesla CEO Elon Musk, Apple co-founder Steve Wozniak, and author and historian Yuval Noah Harari. It advocates a 6-month moratorium to give AI companies and regulators time to formulate safeguards that protect society from the potential risks of the technology.

In the 1940s, researchers and scientists in what would later become known as “artificial intelligence” started toying with a tantalizing idea: What if computer systems were designed like the human brain? The human brain is made up of neurons, which send signals to other neurons through connective synapses, forming neural circuits. The strength of these neural connections can grow or wane over time. Connections that are used frequently become stronger, and neglected ones fade. Together, these connections make up our judgments, our instincts and our very selves. In 1958, Frank Rosenblatt demonstrated a concept for a simplified brain, the perceptron, trained to recognize patterns, and imagined such machines one day reproducing themselves along assembly lines. Rosenblatt didn’t fail; he was simply far ahead of his time.

This technique, now called “deep learning”, eventually started significantly outperforming other approaches to computer vision, prediction, translation and the like. Neural network-based AI has been beating competing approaches in field after field, including computer vision, translation, video games and even chess. Machine learning systems have become highly competent, and researchers claim that they are “scalable”. The term scalable here means that the more money and data you put into a neural network (making it bigger, training it longer and feeding it more data), the better it performs. Even though major tech companies now carry out multi-million-dollar training runs for their systems, no one has come close to discovering the limits, or the potential risks, of this principle.

Ray Kurzweil, one of the eminent futurists, has predicted that computers will have the same level of intelligence as humans by 2029. He states, “2029 is the date I have predicted for when an AI will pass a valid Turing test and therefore achieve human levels of intelligence. I have set the date 2045 for the ‘Singularity’ which is when we will multiply our effective intelligence by a billion-fold by merging with the intelligence we have created.” AI will make most people better off through unprecedented advances in technology; however, it will also profoundly affect what it truly means to be human in the 21st century.

There are, indubitably, some bright sides to the future of AI, including precision medicine tailored to a person’s genetics and more precise diagnosis of disease, which can make treatments easier, more accessible and more affordable. Virtual assistants like Siri and Alexa are making mundane activities hassle-free, contributing to a more relaxed lifestyle. Software like ChatGPT is helping with data and language processing by answering questions in a human-like manner and solving complex problems on a variety of topics.

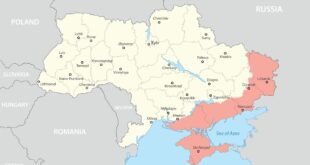

The dark side of AI involves certain risks that stem from the way AI and its mechanisms are being used, including mass surveillance, data abuse and the destruction of digital privacy. Such digital surveillance is already underway in China’s social credit system, which extends surveillance to all parts of a citizen’s life. Modern warfare powered by automated techniques is another major worry, as military supremacy will be dictated by advances in technology. One of the greatest concerns about the rise of AI is its ability to replace human jobs and, eventually, to push human competency out of the socio-economic landscape. The efficiency and accuracy of machine learning models could surpass human performance, widening economic inequalities and fuelling social upheavals and populist uprisings.

Technological advancements in AI have galloped since the arrival of software like ChatGPT from the Microsoft-backed company OpenAI. The company has recently released ChatGPT and GPT-4, two text-generating systems that are causing a frenzy among AI specialists. These seemingly harmless chatbots are built on a “generative” ability to mimic human-like responses. That ability, combined with the speed of their adoption, has caught many off guard as major tech giants around the world race to build generative AI into their products. This alarming situation has been recognized by scientists, researchers, and CEOs of tech companies. An open letter calls for a 6-month pause on ‘giant AI experiments’ and has attracted signatures from the likes of Tesla CEO Elon Musk, Apple co-founder Steve Wozniak, author and historian Yuval Noah Harari, Skype co-founder Jaan Tallinn, politician Andrew Yang, and many others.

The open letter says that the current race dynamic in AI is dangerous, as AI labs are locked in an out-of-control race to develop and deploy learning systems that no one, not even their creators, can understand, predict, or control. It advocates a 6-month moratorium to give AI companies and regulators time to formulate safeguards that protect society from the potential risks of the technology. “This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium”, states the open letter. One potential threat emerging from AI systems is that of data abuse and disinformation engines: violating data privacy, misleading people with fabricated information and perpetuating systemic bias in the information that eventually reaches the public.

The marvel of these AI models and software is not theirs alone to claim; they are built on the labor of workers who toil under poor conditions to label data and train these systems. A TIME investigation found that OpenAI, ChatGPT’s creator, used outsourced Kenyan laborers earning less than $2 per hour in its quest to make ChatGPT less toxic. Some technologists have also warned about deeper security threats. Digital assistants that interface with the web and read and write emails could offer new opportunities to hackers, jeopardizing vast amounts of personal information as well as confidential state secrets. It would be an easy task for hackers to manipulate and exploit such software to access the data stored in it, since AI software lacks adequate safeguards.

There are no bounds to the heights human ingenuity can reach. AI is one invention that could be, and probably is, unfathomably destructive. It is alarmingly dangerous because the day could come when it is no longer in our control, as AI systems lack human consciousness and may do something unaligned with human goals or pose an existential threat. It might seem bizarre, given the stakes, that the industry has been left to self-regulate. It is not legal for tech companies to build nuclear weapons on their own, yet tech giants are racing to develop systems that they themselves acknowledge will likely become more dangerous than nuclear weapons. Progress in AI has happened extraordinarily fast, leaving regulators behind the curve, with almost no heed being paid to the safety regulation of these AI systems.

Only time will tell whether AI advancements in the years to come will prove to be a panacea for the world’s problems or a Frankenstein’s monster threatening the existence of humankind, leaving us to fear a creation we know nothing about.

Geostrategic Media Political Commentary, Analysis, Security, Defense
